Kernel I/O Subsystem

13.4 Kernel I/O Subsystem

13.4.1 I/O Scheduling

- Scheduling I/O requests can greatly improve overall efficiency. Priorities can also play a part in request scheduling.

- The classic example is the scheduling of disk accesses, as discussed in detail in chapter 12.

- Buffering and caching can also help, and can allow for more flexible scheduling options.

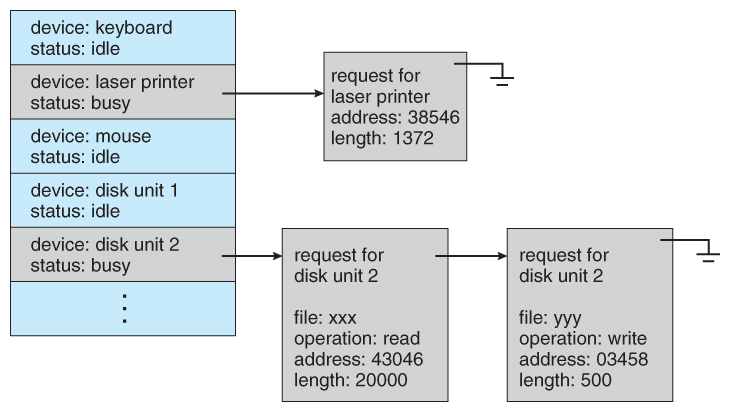

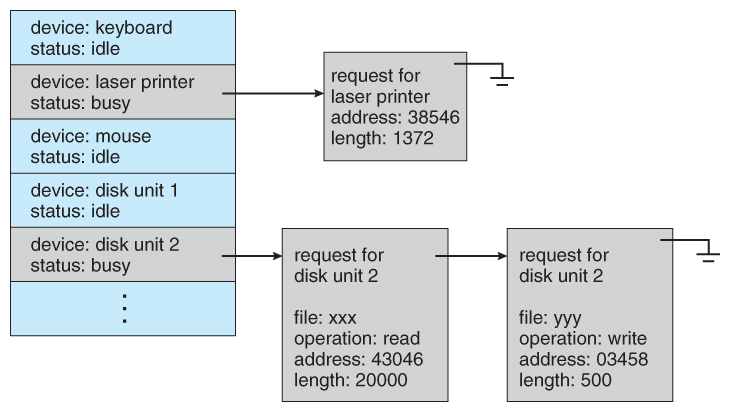

- On systems with many devices, separate request queues are often kept for each device:

Figure 13.9 - Device-status table.

Figure 13.9 - Device-status table.

13.4.2 Buffering

- Buffering of I/O is performed for ( at least ) 3 major reasons:

- Speed differences between two devices. ( See Figure 13.10 below. ) A slow device may write data into a buffer, and when the buffer is full, the entire buffer is sent to the fast device all at once. So that the slow device still has somewhere to write while this is going on, a second buffer is used, and the two buffers alternate as each becomes full. This is known as double buffering. ( Double buffering is often used in ( animated ) graphics, so that one screen image can be generated in a buffer while the other ( completed ) buffer is displayed on the screen. This prevents the user from ever seeing any half-finished screen images. )

- Data transfer size differences. Buffers are used in particular in networking systems to break messages up into smaller packets for transfer, and then for re-assembly at the receiving side.

- To support copy semantics. For example, when an application makes a request for a disk write, the data is copied from the user's memory area into a kernel buffer. Now the application can change their copy of the data, but the data which eventually gets written out to disk is the version of the data at the time the write request was made.

Figure 13.10 - Sun Enterprise 6000 device-transfer rates ( logarithmic ).

Figure 13.10 - Sun Enterprise 6000 device-transfer rates ( logarithmic ).

13.4.3 Caching

- Caching involves keeping a copy of data in a faster-access location than where the data is normally stored.

- Buffering and caching are very similar, except that a buffer may hold the only copy of a given data item, whereas a cache is just a duplicate copy of some other data stored elsewhere.

- Buffering and caching go hand-in-hand, and often the same storage space may be used for both purposes. For example, after a buffer is written to disk, then the copy in memory can be used as a cached copy, (until that buffer is needed for other purposes. )

13.4.4 Spooling and Device Reservation

- A spool ( Simultaneous Peripheral Operations On-Line ) buffers data for ( peripheral ) devices such as printers that cannot support interleaved data streams.

- If multiple processes want to print at the same time, they each send their print data to files stored in the spool directory. When each file is closed, then the application sees that print job as complete, and the print scheduler sends each file to the appropriate printer one at a time.

- Support is provided for viewing the spool queues, removing jobs from the queues, moving jobs from one queue to another queue, and in some cases changing the priorities of jobs in the queues.

- Spool queues can be general ( any laser printer ) or specific ( printer number 42. )

- OSes can also provide support for processes to request / get exclusive access to a particular device, and/or to wait until a device becomes available.

13.4.5 Error Handling

- I/O requests can fail for many reasons, either transient ( buffers overflow ) or permanent ( disk crash ).

- I/O requests usually return an error bit ( or more ) indicating the problem. UNIX systems also set the global variable errno to one of a hundred or so well-defined values to indicate the specific error that has occurred. ( See errno.h for a complete listing, or man errno. )

- Some devices, such as SCSI devices, are capable of providing much more detailed information about errors, and even keep an on-board error log that can be requested by the host.

13.4.6 I/O Protection

- The I/O system must protect against either accidental or deliberate erroneous I/O.

- User applications are not allowed to perform I/O in user mode - All I/O requests are handled through system calls that must be performed in kernel mode.

- Memory mapped areas and I/O ports must be protected by the memory management system, but access to these areas cannot be totally denied to user programs. ( Video games and some other applications need to be able to write directly to video memory for optimal performance for example. ) Instead the memory protection system restricts access so that only one process at a time can access particular parts of memory, such as the portion of the screen memory corresponding to a particular window.

Figure 13.11 - Use of a system call to perform I/O.

Figure 13.11 - Use of a system call to perform I/O.

13.4.7 Kernel Data Structures

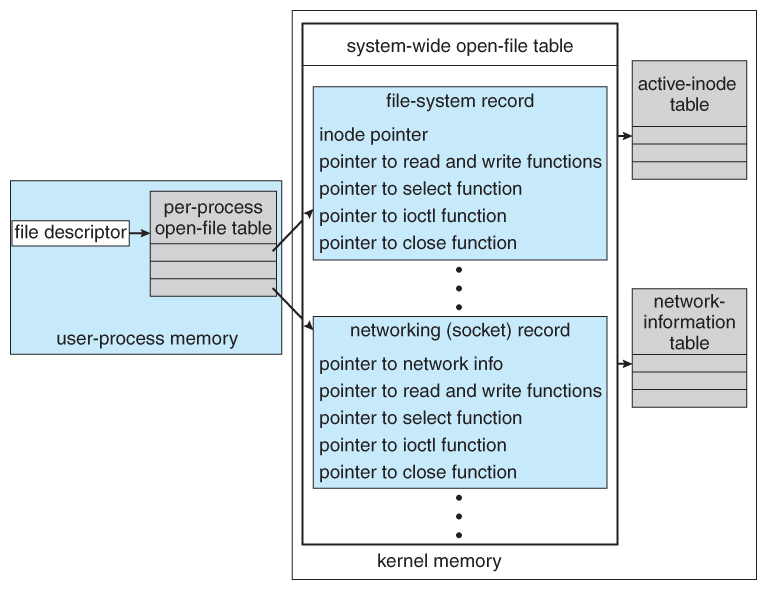

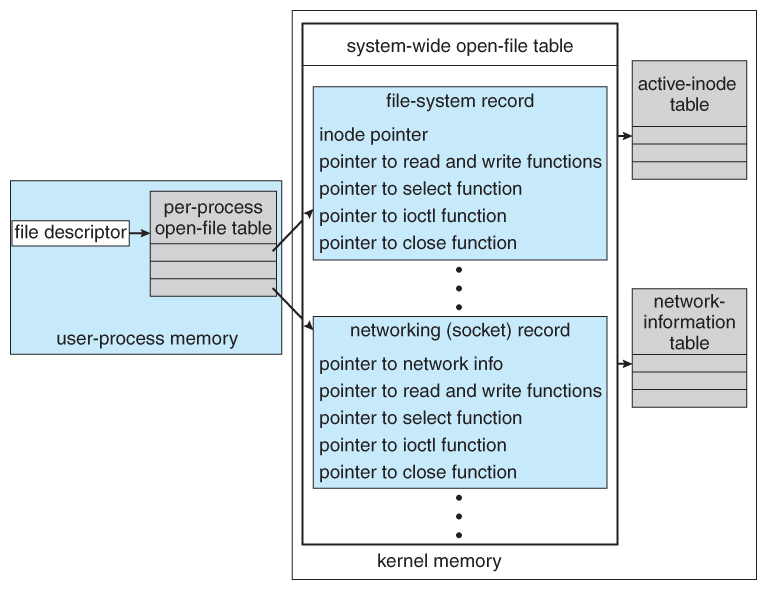

- The kernel maintains a number of important data structures pertaining to the I/O system, such as the open file table.

- These structures are object-oriented, and flexible to allow access to a wide variety of I/O devices through a common interface. ( See Figure 13.12 below. )

- Windows NT carries the object-orientation one step further, implementing I/O as a message-passing system from the source through various intermediaries to the device.

Figure 13.12 - UNIX I/O kernel structure.

Figure 13.12 - UNIX I/O kernel structure.

13.4.6 Kernel I/O Subsystem Summary